C.O.V.E.R. (Clinician’s Opinions, Views, and Expectations concerning the radiology Report) Study: A University Hospital Experience

Joanna Marie D. Choa, Jan M.L. Bosmans

Apr 2018 DOI 10.35460/2546-1621.2017-0038

INTRODUCTION

Effective communication between radiologists and clinicians through the radiology report is one of the major ways in which radiologists contribute to patient management. According to the American College of Radiology (ACR) Guideline for Communication of Diagnostic Imaging Findings, an effective method of communication should (a) be tailored to satisfy the need for timeliness, (b) support the role of the diagnostic imager as a physician consultant by encouraging physician-to-physician communication, and (c) minimize the risk of communication errors. [1] The content, length, clarity, and manner of delivery of reports are vital to conveying knowledge to referring clinicians.

The present study was undertaken to assess the effectiveness and quality of the reports produced at the University of Santo Tomas Hospital (USTH), based on the following parameters of a well-composed radiology report: importance, clinical correlation, referrer’s satisfaction, content, and structure and style. A further aim was to gain insight into the regional differences and preferences of physicians in this part of the world, in comparison with the results of a pioneering European study.

MATERIALS AND METHODS

This study was approved by the Institutional Review Board of USTH (Protocol Code: IRB-TR-09-2015-126). The survey used a printed questionnaire with a printed informed consent form attached. Before proceeding with the survey, respondents signed the informed consent form and received a copy of it.

This is an observational study that used a specifically designed and tested questionnaire as the data-gathering tool. We used the questionnaire from the COVER methodology [2], excluding one question regarding language and one question regarding making a report, and added three questions: two regarding content and one regarding structure and style. The question about language was excluded because all radiology reports in our country are written in English, so there is no need to translate them into the vernacular. The other excluded question pertained to the radiologist’s own preferences in writing reports.

We conducted this single-center study at the University of Santo Tomas Hospital, a tertiary private academic training institution in the Philippines, Southeast Asia (SEA), where almost all clinicians holding office in the institution practice a subspecialty.

The study included volunteer (nonrandomized) clinicians practicing as consultants or undergoing fellowship training at USTH who order imaging studies and/or read reports from the Department of Radiological Sciences, regardless of age, gender, specialty, or years in practice or training. These imaging studies and reports included X-ray, general ultrasound, computed tomography, magnetic resonance imaging, interventional radiology, and breast imaging. Reports from obstetric and cardiovascular sonography were excluded. Radiology consultants and radiology trainees were also excluded from the study.

In the first part of the survey, the physicians’ demographics (age, gender, specialty, and years in practice) were recorded. The second part consisted of forty-one (41) statements, grouped into categories based on the following parameters of a radiology report: (1) importance, (2) clinical correlation, (3) referrer’s satisfaction, (4) content, and (5) structure and style, with a final group of three statements on making a report. The number of statements differed between categories. The questionnaire could be completed in thirty minutes or less. Respondents graded their level of agreement with each statement on a five-point Likert scale: entirely disagree, partly disagree, neutral, partly agree, and entirely agree. In the third part, respondents were given space to enter free-text comments, opinions, and/or suggestions for improving the radiology report.

We collated the frequency of each response for every statement and analyzed these frequencies statistically to determine the average opinion of the clinicians on each statement of the questionnaire. The result for each statement was compared with that of the European (EURO) study.
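The tallying step can be illustrated with a short sketch. It assumes, as the varying totals in Tables 2A-F suggest, that unanswered statements are dropped before percentages are computed and that each percentage is rounded to one decimal place. The function name `tally` and the string labels are hypothetical; this is not the software actually used in the study. The counts are those of statement 2 in Table 2A, with two blank answers added to reach the 283 respondents.

```python
from collections import Counter

# Likert options used in the questionnaire (hypothetical string labels).
OPTIONS = ["entirely disagree", "partly disagree", "neutral",
           "partly agree", "entirely agree"]


def tally(responses):
    """Return (n, percentages) for one statement.

    Blank answers (None) are omitted, so percentages are relative to the
    number of respondents who actually rated the statement.
    """
    answered = [r for r in responses if r is not None]
    n = len(answered)
    counts = Counter(answered)
    pct = {opt: round(100 * counts[opt] / n, 1) for opt in OPTIONS}
    # Collapse partial and total (dis)agreement into the "(Total)" columns.
    disagree = counts["entirely disagree"] + counts["partly disagree"]
    agree = counts["partly agree"] + counts["entirely agree"]
    pct["disagree (total)"] = round(100 * disagree / n, 1)
    pct["agree (total)"] = round(100 * agree / n, 1)
    return n, pct


# Statement 2A.2: counts from Table 2A plus two unrated questionnaires.
counts_2a2 = {"entirely disagree": 39, "partly disagree": 83, "neutral": 60,
              "partly agree": 81, "entirely agree": 18}
responses = [opt for opt, k in counts_2a2.items() for _ in range(k)] + [None, None]
n, pct = tally(responses)
print(n, pct["disagree (total)"], pct["neutral"], pct["agree (total)"])
# 281 43.4 21.4 35.2
```

Computing the totals from the raw counts, rather than adding already-rounded percentages, can shift the last decimal by 0.1, which may account for small discrepancies in some table rows.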

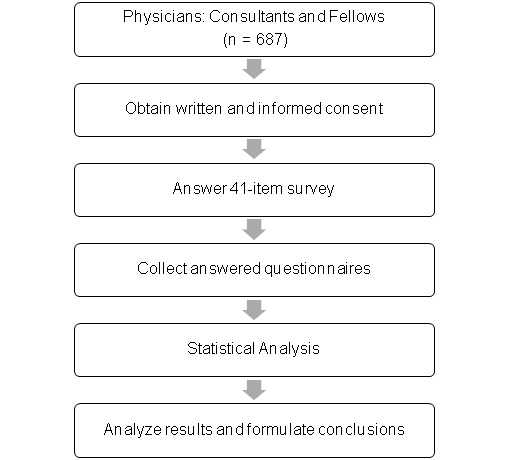

Flow of data acquisition

RESULTS

A total of 283 clinicians participated in this study: 243 consultants and 40 fellows. Respondents’ demographics are shown in Table 1, and the complete tabulations in Tables 2A-F. Statements left unrated were omitted from the final count, so the total varies slightly per statement. Each statement was answered by at least 98% of the respondents.

On statements regarding Importance

Clinicians believed that the radiology report is an indispensable tool in their work (90.8%, Table 2A) and that it often mentions important issues they would not have noticed themselves (79.4%, Table 2A). Forty-three per cent of SEA respondents (122 of 281, Table 2A) disagreed that they are better able than the radiologist to interpret imaging studies from their own specialty, compared with 63% in the EURO study. Both the SEA (84.8%, Table 2A) and EURO (83.0%, Table 2A) studies showed that clinicians read the report as soon as it is available, and not only at the end of the observation period or hospital stay.

On statements regarding Clinical Correlation

In both surveys, clinicians considered it necessary for the radiologist to know the patient’s medical condition (SEA 73.1% and EURO 87.0%, Table 2B) and the clinical question (SEA 74.9% and EURO 97.4%, Table 2B) in order to produce a good report. A smaller proportion of SEA clinicians (52.3%, Table 2B) than EURO clinicians (85.3%, Table 2B) disagreed with the statement that it is better for the radiologist not to know much about the patient, in order to avoid bias. Some SEA clinicians (30.4%, Table 2B) felt that clinical correlation should be done only on a case-to-case basis, and that the information obtained by the radiologist should be indicated in the report so that clinicians can assess potential bias. Both groups agreed that a clear clinical question should be stated when the requested examination is not part of a routine (SEA 73.9% versus EURO 95.4%, Table 2B).

One SEA clinician mentioned that it would be good to speak with the radiologist before the report is made, thereby promoting open lines of communication. Clinicians also wanted radiologists to contact them when they see findings they cannot understand. Another clinician stated that the clinical question should be answered explicitly by the radiologist.

On statements regarding Referrer’s Satisfaction

Both SEA (86.5%, Table 2C) and EURO (71.8%, Table 2C) respondents were generally satisfied with the reports they received and had no trouble understanding them (65.4% for SEA and 77.5% for EURO, Table 2C). More SEA clinicians (83.0%, Table 2C) than EURO clinicians (50.1%, Table 2C) agreed that the language and style of radiology reports are mostly clear. More than half of the SEA respondents (62.9%, Table 2C) thought that radiology reports could be more easily understood if common words and expressions were used instead of medical slang, whereas the EURO group was undecided. The SEA group also believed that radiologists proofread their reports before sending them (72.3%, Table 2C), while the EURO group remained neutral (52.7%, Table 2C).

When asked whether their own reports are better, more concise, and more easily understood than the radiologists’, the SEA clinicians responded in the negative, whereas the EURO clinicians responded positively (Table 2C).

One SEA clinician stated that subspecialization in radiology should be honored, e.g., neuroradiology studies should be interpreted by neuroradiologists. Another clinician wanted reports to be available sooner, if possible within an hour or two.

On statements regarding Content

Half of the SEA clinicians (50.9%, Table 2D) agreed that a simple examination with no abnormal findings can be reported with the single statement “No abnormal findings,” whereas their EURO counterparts were undecided. By contrast, SEA respondents were undecided about reporting complex examinations with a simple “No abnormal findings”; most of their EURO counterparts (70.9%, Table 2D) disagreed. Both groups agreed that a report longer than a few lines should end with a conclusion (80.9% for SEA and 93.9% for EURO, Table 2D), and SEA clinicians further agreed that this conclusion should give an impression of the pathology rather than merely reiterate findings already mentioned in the descriptive part (83.0%, Table 2D). If an impression cannot be made, the reasons should be stated. Clinicians in both the SEA and EURO studies agreed that the descriptive part of the report should be read as well as the conclusion (89.4% and 85.8%, respectively, Table 2D).

About half of the respondents in both the SEA (54.0%, Table 2D) and EURO (50.1%, Table 2D) studies believed that when a particular organ is not mentioned, it has not been looked at closely. Both studies supported reports consisting of a fixed list of short descriptions of the findings (81.4% for SEA and 54.4% for EURO, Table 2D) and written in unambiguous terms based on a common, well-defined, standard radiology lexicon (81.1% and 67.4%, respectively, Table 2D).

On statements regarding Structure and Style

The SEA respondents accepted reports presented both as free text (51.3%, Table 2E) and in itemized-list form (66.5%, Table 2E). By contrast, more than half of the EURO respondents (56.0%, Table 2E) rejected the prose-type report. One SEA clinician commented that he would like short descriptions of the separate organs. Both groups wanted complex examinations to be reported with separate headings for each organ system (83.4% for SEA and 84.5% for EURO, Table 2E). Both studies showed that a simpler style and vocabulary in radiology reports should be considered for better understanding (84.5% for SEA and 70.6% for EURO, Table 2E). Explicit technical details of the examination in CT and MRI reports were wanted in both the SEA (73.8%, Table 2E) and EURO (76.5%, Table 2E) studies.

On making a report involving trainees

Clinicians in the SEA study did not reach a definite response when asked whether making a good report is mainly a matter of talent, whereas their EURO counterparts rejected the idea (61.9%, Table 2F). Both studies, however, agreed that making a good report can be learned and that this should be an integral part of radiology training (89.3% for SEA and 92.4% for EURO, Table 2F). Neither the SEA nor the EURO study yielded a clear result on whether staff radiologists make better reports than residents. One SEA respondent mentioned that while experience may be the best teacher, some residents are also good at composing their reports.

DISCUSSION

The radiology report is a vital document for the diagnostic and therapeutic management of the patient. Many clinicians acknowledge that radiologists interpret imaging studies better than they do themselves. Granting this trust, clinicians assume that radiologists have diligently looked through all the images and applied all their skills before producing their report.

Although nobody doubts that the availability of clinical information in the request is important for the quality of the report, there is less unanimity about whether the radiologist should have this information before studying the images. (3) One SEA clinician commented that he prefers radiologists to be blinded to the clinical data to avoid bias in the interpretation. There are, however, several reasons why clinical data are important for a radiologist. Knowing the clinical data helps the radiologist choose the most suitable imaging technique and study protocol, and interpret the images more specifically in the context of the clinical question. (3) The radiologist can focus on the aspects essential to a particular pathology (3) and can recommend further imaging if the examination carried out is not conclusive. (3) The cost-benefit ratio of examinations can also be optimized, taking into account the radiologist’s requirements. (3)

The radiologist is exclusively responsible for choosing the type of procedure to be performed and is therefore both clinically and legally responsible for it. (3) Where deemed necessary, it is part of the radiologist’s task to recommend appropriate follow-up studies or additional examinations to reach a diagnosis, thereby avoiding useless, costly, and potentially harmful additional procedures. (4)

Several clinicians in our study commented that they would like to receive results in the shortest time possible. While this is not possible in the current setting of our institution, which has no PACS installed, urgent findings are promptly communicated to the attending resident and/or consultant by telephone. Such prompt communication with clinicians is in line with the ACR guideline [1] and encourages discussion on the most appropriate imaging study. It likewise helps eliminate potential misunderstandings of the report. Adding a group phone number, an email address, or a link to the radiology group’s website at the end of the report has also been suggested. (5)

Another concern of clinicians that needs to be addressed is their preference for system- or specialty-based interpretation of imaging studies. Our institution practices modality-based interpretation, except in breast and musculoskeletal imaging. This preference should be taken into consideration when planning the further development of radiology practice in the Philippines.

Almost two thirds of the EURO clinicians, a clear majority, believed that radiologists are better able to interpret imaging studies from the clinicians’ own specialty than they are themselves, whereas the SEA clinicians were undecided on this issue. The reason for this difference is unclear.

The SEA respondents still prefer to receive reports in prose form, whereas the EURO clinicians rejected this format. A possible explanation is that most hospitals in the region are switching to digital imaging and PACS later than the countries in the EURO study, so that clinicians have not been exposed to alternatives to the prose report. Nevertheless, the SEA clinicians did not exclude the idea of receiving an itemized report; two thirds of them favored it (66.5%, Table 2E).

Many institutions are considering switching to structured reporting (SR), which implies the use of a preformatted reporting model (a template). Itemized reporting (7) and tabular reporting (8) can be considered specific types of structured reporting. How reports should be structured has been the subject of ongoing discussion. The RSNA has tried to address this issue by developing a library of reporting templates to which every member of the RSNA and the European Society of Radiology (ESR) can contribute (6); only the underlying principles these templates have to follow have been defined. In this way, the personal preferences of radiologists can be respected, while their templates keep a format that makes them suitable for integration into future PACS/RIS systems. Despite these efforts, the acceptance of SR in daily practice has been very slow. (3) Moreover, because each radiologist has a very personal and distinct style, no standard method of reporting is universally accepted.

Traditionally, reporting has been taught by senior consultants passing on their knowledge to junior staff and residents; most centers do not have a formal course or training program on how to report. Nonetheless, the radiology report, being the final and most conspicuous product of a radiologist’s many years of training, should reflect her or his competence and expertise. (8) A well-made report also inspires trust and confidence in the referring clinician. The majority of both SEA and EURO respondents agreed that learning to report should be an integral part of the residency training program. Learning to report through practice, under the supervision of experienced teachers, is highly advisable.

Interpreting results obtained with a Likert scale poses particular problems. Some statisticians argue that a Likert scale, being an ordinal scale, does not produce results that can be treated as numerical values. This criticism is not shared by most authors, and countless studies do contain calculations based on such results. For reasons of consistency and comparability, we adhered to the ‘general election principle’ introduced in the EURO C.O.V.E.R. study, in which total and partial agreements (and disagreements) were added together and translated into YES/NO/NEUTRAL/UNDECIDED results. Furthermore, the results of this study reflect the preferences of referring clinicians in just one teaching hospital in the Philippines. Additional studies in other medical centers in Southeast Asia are required to verify whether the results can be applied to other hospitals in the region.
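The ‘general election principle’ can be expressed as a small decision rule. The sketch below assumes that a category wins only with a strict majority (more than 50%) of the answered responses and that the statement is otherwise UNDECIDED. This threshold is inferred from the labels in Tables 2A-F (for example, 51.3% agreement is labeled YES, while 46.8% agreement is labeled UNDECIDED) rather than taken from the EURO protocol, and the function name `election_result` is hypothetical.

```python
def election_result(disagree_pct, neutral_pct, agree_pct, majority=50.0):
    """Translate collapsed Likert percentages into a verdict label.

    Assumption: a verdict requires a strict majority (> 50%) of the
    answered responses; otherwise the statement is UNDECIDED.
    """
    if agree_pct > majority:
        return f"YES ({agree_pct})"
    if disagree_pct > majority:
        return f"NO ({disagree_pct})"
    if neutral_pct > majority:
        return f"NEUTRAL ({neutral_pct})"
    return "UNDECIDED"


# Rows from Tables 2B, 2E and 2F: (disagree total, neutral, agree total).
print(election_result(52.3, 17.3, 30.4))  # NO (52.3)     statement 2B.3
print(election_result(25.4, 23.3, 51.3))  # YES (51.3)    statement 2E.1
print(election_result(36.5, 16.7, 46.8))  # UNDECIDED     statement 2F.1
```

Because the three collapsed percentages sum to about 100%, at most one of them can exceed 50%, so the order of the checks does not affect the result.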

CONCLUSION

The radiology reports generated at USTH were deemed acceptable by referring clinicians and remain an essential part of the proper care and management of patients. Several areas for improvement were identified that can make our reports more effective. Learning how to report should be part of the training of radiology residents.

REFERENCES

1. American College of Radiology. ACR practice guideline for communication of diagnostic imaging findings. Practice guidelines & technical standards. 2005.

2. Bosmans JM. The radiology report: from prose to structured reporting and back again? PhD dissertation. Universiteit Antwerpen (Belgium); 2011.

3. Grigenti F. Radiological reporting in clinical practice. New York: Springer; 2008.

4. Hall FM. Language of the radiology report: primer for residents and wayward radiologists. AJR Am J Roentgenol. 2000 Nov;175(5):1239-42.

5. Gunn AJ, Mangano MD, Choy G, Sahani DV. Rethinking the role of the radiologist: enhancing visibility through both traditional and nontraditional reporting practices. Radiographics. 2015 Mar 12;35(2):416-23.

6. RSNA Informatics Reporting. [cited 20 April 2015]. Available from: http://radreport.org/about.php

7. Cramer JA, Eisenmenger LB, Pierson NS, Dhatt HS, Heilbrun ME. Structured and templated reporting: an overview. Appl Radiol. 2014;43(8):13.

8. Grieve FM, Plumb AA, Khan SH. Radiology reporting: a general practitioner's perspective. Br J Radiol. 2010 Jan;83(985):17-22.

9. Bosmans JM, Neri E, Ratib O, Kahn Jr CE. Structured reporting: a fusion reactor hungry for fuel. Insights Imaging. 2015 Feb 1;6(1):129-32.

10. Bosmans JM, Peremans L, De Schepper AM, Duyck PO, Parizel PM. How do referring clinicians want radiologists to report? Suggestions from the COVER survey. Insights Imaging. 2011 Oct 1;2(5):577-84.

11. Bosmans JM, Weyler JJ, De Schepper AM, Parizel PM. The radiology report as seen by radiologists and referring clinicians: results of the COVER and ROVER surveys. Radiology. 2011 Apr;259(1):184-95.

12. Bosmans JM. Communication with other physicians – is there a need for structured reports? Lecture presented at: MIR; 2013; Barcelona.

13. Keen CE. Radiology reports: what do physicians really want? 2009 [cited 22 February 2015]. Available from: http://www.auntminnie.com/index.aspx?sec=prtf&sub=def&pag=dis&ItemId=88676&printpage=true&fsec=rca&fsub=rsna_2008

14. Keen CE. What clinicians want from radiology reports. 2011 [cited 20 September 2014]. Available from: http://www.auntminnieeurope.com/index.aspx?sec=ser&sub=def&pag=dis&ItemID=605367

15. Morgan TA, Heilbrun ME, Kahn Jr CE. Reporting initiative of the Radiological Society of North America: progress and new directions. 2014;642-645.

16. Raymond J, Trop I. The practice of ethics in the era of evidence-based radiology. Radiology. 2007 Sep;244(3):643-9.

17. Kahn Jr CE, Langlotz CP, Burnside ES, Carrino JA, Channin DS, Hovsepian DM, Rubin DL. Toward best practices in radiology reporting. Radiology. 2009 Sep;252(3):852-6.

18. Naik SS, Hanbidge A, Wilson SR. Radiology reports: examining radiologist and clinician preferences regarding style and content. AJR Am J Roentgenol. 2001 Mar;176(3):591-8.

DISCLOSURE AND CONFLICT OF INTEREST

The authors declared no conflict of interest that could inappropriately bias the execution of this research or the publication of this scientific work. Both authors have nothing to disclose.

AUTHORS CONTRIBUTIONS

JMDC and JMLB performed the literature search and wrote the manuscript. JMDC performed the actual survey of the clinicians. Both JMDC and JMLB analyzed the data. Both authors have read, critically reviewed, and approved the final manuscript.

Table 1. Demographics of the clinicians (consultants and fellows) who participated in the study (n = 283)

| Characteristic | Value |
|---|---|
| Gender: male | 145 (51%) |
| Gender: female | 135 (48%) |
| Gender: undisclosed | 3 (1%) |
| Age in years: range (mean) | 28–83 (47) |

| Subspecialty | Consultants | Fellows |
|---|---|---|
| Anesthesiology | 10 | 0 |
| Internal Medicine | 81 | 25 |
| Neurology | 12 | 1 |
| Nuclear Medicine | 2 | 0 |
| OB-Gynecology | 16 | 3 |
| Ophthalmology | 5 | 0 |
| Otorhinolaryngology | 12 | 0 |
| Pediatrics | 37 | 10 |
| Radiation Oncology | 1 | 0 |
| Rehabilitation Medicine | 3 | 0 |
| Surgery | 63 | 1 |
| Undisclosed | 1 | 0 |
| Total | 243 | 40 |

Table 2A-F. Tally of responses to each statement and comparison with the European C.O.V.E.R. study. Values are number of respondents (%).

2A. On Importance

| Statement | Entirely disagree | Partly disagree | Disagree (total) | Neutral | Agree (total) | Partly agree | Entirely agree | Total | SEA result | EURO COVER study |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. The radiology report is an indispensable tool in my medical work. | 4 (1.4) | 11 (3.9) | 15 (5.3) | 11 (3.9) | 256 (90.8) | 113 (40.1) | 143 (50.7) | 282 (100) | YES (90.8) | YES (87.0) |
| 2. I am better able to interpret an imaging study from my own specialty than the radiologist. | 39 (13.9) | 83 (29.5) | 122 (43.4) | 60 (21.4) | 99 (35.2) | 81 (28.8) | 18 (6.4) | 281 (100) | UNDECIDED | NO (63.0) |
| 3. The radiology report often mentions important issues I would not have noticed myself on the images. | 2 (0.7) | 20 (7.1) | 22 (7.8) | 36 (12.8) | 224 (79.4) | 154 (54.6) | 70 (24.8) | 282 (100) | YES (79.4) | YES (58.9) |
| 4. I read a radiology report as soon as it is available. | 5 (1.8) | 17 (6.0) | 22 (7.8) | 21 (7.4) | 240 (84.8) | 98 (34.6) | 142 (50.2) | 283 (100) | YES (84.8) | YES (83.0) |
| 5. I only read a radiology report at the end of the hospital stay or the observation period. | 153 (54.4) | 81 (28.8) | 234 (83.3) | 22 (7.8) | 25 (8.9) | 23 (8.2) | 2 (0.7) | 281 (100) | NO (83.3) | NO (79.4) |
| 6. I often do not read the radiology report. | 177 (62.8) | 70 (24.8) | 247 (87.6) | 21 (7.4) | 14 (5.0) | 12 (4.3) | 2 (0.7) | 282 (100) | NO (87.6) | NO (84.6) |
| 7. The content of a radiology report is not important, since it is hardly read by anyone. | 193 (68.2) | 60 (21.2) | 253 (89.4) | 18 (6.4) | 12 (4.2) | 10 (3.5) | 2 (0.7) | 283 (100) | NO (89.4) | NO (96.3) |

2B. On Clinical Correlation

| Statement | Entirely disagree | Partly disagree | Disagree (total) | Neutral | Agree (total) | Partly agree | Entirely agree | Total | SEA result | EURO COVER study |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. In order to make a good report, the radiologist has to know the medical condition of the patient. | 17 (6.0) | 28 (9.9) | 45 (15.9) | 31 (11.0) | 207 (73.1) | 95 (33.6) | 112 (39.6) | 283 (100) | YES (73.1) | YES (87.0) |
| 2. In order to make a good report, the radiologist has to know what the clinical question is. | 11 (3.9) | 30 (10.6) | 41 (14.5) | 30 (10.6) | 212 (74.9) | 105 (37.1) | 107 (37.8) | 283 (100) | YES (74.9) | YES (97.4) |
| 3. It is better that the radiologist does not know much about the patient, in order to avoid bias. | 59 (20.8) | 89 (31.4) | 148 (52.3) | 49 (17.3) | 86 (30.4) | 71 (25.1) | 15 (5.3) | 283 (100) | NO (52.3) | NO (85.3) |
| 4. Any physician who requests a radiological examination that is not part of any routine should state a clear clinical question. | 11 (3.9) | 29 (10.2) | 40 (14.1) | 34 (12.0) | 209 (73.9) | 127 (44.9) | 82 (29.0) | 283 (100) | YES (73.9) | YES (95.4) |

2C. On Referrer’s Satisfaction

| Statement | Entirely disagree | Partly disagree | Disagree (total) | Neutral | Agree (total) | Partly agree | Entirely agree | Total | SEA result | EURO COVER study |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Generally, I am satisfied with the reports I receive. | 3 (1.1) | 17 (6.0) | 20 (7.1) | 18 (6.4) | 244 (86.5) | 181 (64.2) | 63 (22.3) | 282 (100) | YES (86.5) | YES (71.8) |
| 2. Not taking into account radiological slang, I often have trouble understanding what the radiologist means. | 53 (18.7) | 132 (46.6) | 185 (65.4) | 50 (17.7) | 48 (17.0) | 46 (16.3) | 2 (0.7) | 283 (100) | NO (65.4) | NO (77.5) |
| 3. The language and style of radiology reports are mostly clear. | 0 (0.0) | 14 (4.9) | 14 (4.9) | 34 (12.0) | 235 (83.0) | 162 (57.2) | 73 (25.8) | 283 (100) | YES (83.0) | YES (50.1) |
| 4. A radiology report can be read more easily if the radiologist uses common words and expressions instead of medical slang. | 13 (4.6) | 41 (14.5) | 54 (19.1) | 51 (18.0) | 178 (62.9) | 117 (41.3) | 61 (21.6) | 283 (100) | YES (62.9) | UNDECIDED |
| 5. In a radiology report, simple things are often said in a complicated way. | 18 (6.4) | 103 (36.4) | 121 (42.8) | 65 (23.0) | 97 (34.3) | 83 (29.3) | 14 (4.9) | 283 (100) | UNDECIDED | NO (57.4) |
| 6. One should be able to understand a radiology report without great effort. | 0 (0.0) | 14 (4.9) | 14 (4.9) | 27 (9.5) | 242 (85.5) | 109 (38.5) | 133 (47.0) | 283 (100) | YES (85.5) | YES (87.8) |
| 7. Radiologists proofread their reports thoroughly before they are being sent. | 1 (0.4) | 28 (9.9) | 29 (10.3) | 49 (17.4) | 204 (72.3) | 127 (45.0) | 77 (27.3) | 282 (100) | YES (72.3) | NEUTRAL (52.7) |
| 8. It is the responsibility of the radiologist to adapt his style and choice of words to the level of the clinician. | 23 (8.1) | 49 (17.3) | 72 (25.4) | 64 (22.6) | 147 (51.9) | 113 (39.9) | 34 (12.0) | 283 (100) | YES (51.9) | YES (92.3) |
| 9. My reports can be understood without effort. | 64 (22.8) | 163 (58.0) | 227 (80.8) | 40 (14.2) | 14 (5.0) | 14 (5.0) | 0 (0.0) | 281 (100) | NO (80.8) | YES |
| 10. My reports are concise. | 62 (22.2) | 171 (61.3) | 233 (83.5) | 30 (10.8) | 16 (5.7) | 16 (5.7) | 0 (0.0) | 279 (100) | NO (83.5) | YES (71.4) |

2D. On Content

| Statement | Entirely disagree | Partly disagree | Disagree (total) | Neutral | Agree (total) | Partly agree | Entirely agree | Total | SEA result | EURO COVER study |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. When a simple examination (e.g.: a chest x-ray) does not show anything abnormal, the report can be limited to a mere: “No abnormal findings.” | 42 (14.8) | 64 (22.6) | 106 (37.4) | 33 (11.7) | 144 (50.9) | 94 (33.2) | 50 (17.7) | 283 (100) | YES (50.9) | UNDECIDED |
| 2. When a complex examination (e.g.: an ultrasonography of the abdomen) does not show anything abnormal, the report can be limited to a mere: “No abnormal findings.” | 58 (20.5) | 68 (24.0) | 126 (44.5) | 28 (9.9) | 129 (45.6) | 88 (31.1) | 41 (14.5) | 283 (100) | UNDECIDED | NO (70.9) |
| 3. A radiology report that is longer than a few lines should end with a conclusion. | 9 (3.2) | 15 (5.3) | 24 (8.5) | 30 (10.6) | 228 (80.9) | 118 (41.8) | 110 (39.0) | 282 (100) | YES (80.9) | YES |
| 4. I usually only read the conclusion of a radiology report. | 98 (34.8) | 85 (30.1) | 183 (64.9) | 22 (7.8) | 77 (27.3) | 63 (22.3) | 14 (5.0) | 282 (100) | NO (64.9) | NO (66.8) |
| 5. The descriptive part of a report should also be read, not only the conclusion. | 4 (1.4) | 16 (5.7) | 20 (7.1) | 10 (3.5) | 252 (89.4) | 96 (34.0) | 156 (55.3) | 282 (100) | YES (89.4) | YES (85.8) |
| 6. If a radiologist does not mention a particular organ or body part, he will not have looked at it closely. | 14 (5.0) | 69 (24.8) | 83 (29.9) | 45 (16.2) | 150 (54.0) | 118 (42.4) | 32 (11.5) | 278 (100) | YES (54.0) | YES (50.1) |
| 7. Even if the report is short, I assume the radiologist will have looked at the examination thoroughly. | 5 (1.8) | 31 (11.0) | 36 (12.8) | 37 (13.1) | 209 (74.1) | 122 (43.3) | 87 (30.9) | 282 (100) | YES (74.1) | YES (80.3) |
| 8. The report should conclude with an impression of the pathology being described, not merely reiterating the findings. | 0 (0.0) | 14 (5.0) | 14 (5.0) | 34 (12.1) | 234 (83.0) | 126 (44.7) | 108 (38.3) | 282 (100) | YES (83.0) | N/A |
| 9. A report should consist of a fixed list of short descriptions of the findings. | 1 (0.4) | 13 (4.6) | 14 (5.0) | 38 (13.6) | 228 (81.4) | 140 (50.0) | 88 (31.4) | 280 (100) | YES (81.4) | YES (54.4) |
| 10. I would prefer radiologists to use only unambiguous terminology, based on a common, well-defined radiology lexicon. | 2 (0.7) | 27 (9.6) | 29 (10.4) | 24 (8.6) | 227 (81.1) | 112 (40.0) | 115 (41.1) | 280 (100) | YES (81.1) | YES (67.4) |

2E. On Structure and Style

| Statement | Entirely disagree | Partly disagree | Disagree (total) | Neutral | Agree (total) | Partly agree | Entirely agree | Total | SEA result | EURO COVER study |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. A report should consist of prose, like a composition. | 12 (4.3) | 59 (21.1) | 71 (25.4) | 65 (23.3) | 143 (51.3) | 108 (38.7) | 35 (12.5) | 279 (100) | YES (51.3) | NO (56.0) |
| 2. The report should be written in an itemized-list form. | 6 (2.1) | 41 (14.6) | 47 (16.7) | 47 (16.7) | 187 (66.5) | 142 (50.5) | 45 (16.0) | 281 (100) | YES (66.5) | N/A |
| 3. When reporting complex examinations (CT, MRI, US…) it is better to work with separate headings for each organ system. | 0 (0.0) | 10 (3.5) | 10 (3.5) | 37 (13.1) | 236 (83.4) | 136 (48.1) | 100 (35.3) | 283 (100) | YES (83.4) | YES (84.5) |
| 4. The simpler the style and vocabulary of a radiology report, the better the message will be understood. | 1 (0.4) | 12 (4.2) | 13 (4.6) | 31 (11.0) | 239 (84.5) | 124 (43.8) | 115 (40.6) | 283 (100) | YES (84.5) | YES (70.6) |
| 5. The style of radiology reports is mostly pleasant. | 0 (0.0) | 13 (4.6) | 13 (4.6) | 39 (13.8) | 231 (81.6) | 167 (59.0) | 64 (22.6) | 283 (100) | YES (81.6) | UNDECIDED |
| 6. In CT and MRI reports, the technical details of the examination should be mentioned explicitly. | 6 (2.1) | 36 (12.8) | 42 (14.9) | 32 (11.3) | 208 (73.8) | 122 (43.3) | 86 (30.5) | 282 (100) | YES (73.8) | YES (76.5) |
| 7. Clinical information, the clinical question, the descriptive part of the report, the conclusion and remarks should be put into separate paragraphs. | 1 (0.4) | 7 (2.5) | 8 (2.8) | 17 (6.0) | 256 (91.1) | 156 (55.5) | 100 (35.6) | 281 (100) | YES (91.1) | YES (86.6) |

2F. On making a report

| Statement | Entirely disagree | Partly disagree | Disagree (total) | Neutral | Agree (total) | Partly agree | Entirely agree | Total | SEA result | EURO COVER study |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Making a good report is a matter of talent: either you are able to make one or you are not. | 41 (14.5) | 62 (22.0) | 103 (36.5) | 47 (16.7) | 132 (46.8) | 97 (34.3) | 35 (12.4) | 282 (100) | UNDECIDED | NO (61.9) |
| 2. Learning to report should be an obligatory and well-structured part of the training of radiologists. | 3 (1.1) | 12 (4.3) | 15 (5.3) | 15 (5.3) | 251 (89.3) | 115 (40.9) | 136 (48.4) | 281 (100) | YES (89.3) | YES (92.4) |
| 3. Not taking into account their knowledge of radiology, staff radiologists make better reports than residents-in-training. | 18 (6.5) | 44 (15.8) | 62 (22.2) | 79 (28.3) | 138 (49.5) | 111 (39.8) | 27 (9.7) | 279 (100) | UNDECIDED | NEUTRAL (58.7) |

CC BY: Open Access. This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/
